Algorithms around the world work in the service of discrimination

The potential for abuse is built into technology. We are tempted by the promise of security or greater convenience. It is all the easier to agree when the cost is often borne by someone else, a group that already faces discrimination anyway: Uighurs, Muslims, Black people, women. This is happening all over the world, from China to the United States.

You do not have to be afraid anymore. Our facial recognition system will identify a Uighur, a Tibetan or a member of another suspect group. It will watch him closely. It will record his actions. It will check where he came from and where he went. If it turns out that there are more of them in the area, it will send an alarm to law enforcement. They will arrive and bring the situation under control.

This is how the Chinese startup CloudWalk advertises on its website. It offers Chinese citizens security and protection from suspect groups. In other words, it automates racism.

Uighurs on the cameras of the People's Republic of China

Uighurs are a mostly Muslim minority. An estimated 11 million of them live in China, mainly in the Xinjiang Uyghur Autonomous Region. This is where the camps are located in which Uighurs are held for weeks or months and subjected to indoctrination. They listen to lectures about the one true doctrine and the one righteous party, sing hymns in its honor and write self-critical essays analyzing their own faults. The biggest fault of all: professing Islam. You can end up in a camp for reciting the Koran at a funeral.

China denies that these are re-education camps. Officials speak instead of correctional institutions meant to prevent the spread of extremism and to offer vocational training. Witness accounts contradict these claims.

Uighurs have somewhat different features from most Han Chinese and are closer in appearance to the peoples of Central Asia, which makes them considerably easier to recognize. As The New York Times reports, the authorities in the Middle Kingdom use an advanced facial recognition system designed to track Uighurs. Experts are sounding the alarm: the state is using the latest technology on a large scale to target citizens belonging to a selected minority, monitor their behavior and keep them under close control. Some witnesses report that cameras are installed even in private homes.

One, two, three, Xi Jinping is watching.

The Chinese government has no qualms about pervasive surveillance. Its camera network covers more and more of the country. Tireless lenses scan streets, pedestrian crossings and bazaars, searching for faces with features they are able to recognize. They are looking for Uighurs. Most of the cameras equipped with minority-recognition systems are in western Xinjiang, but the government is gradually extending them to the largest cities in eastern China as well.

The technology is not yet advanced enough to be relied on entirely. Not all cameras are of good quality, and the systems do not work properly in poor lighting. They are fallible, but that does not stop the authorities from seeking them out. The NYT obtained documents showing that in early 2018 more than two dozen police departments across China applied for access to this technology. China wields facial recognition like a weapon whose optical blade is turned against a minority living within its own borders.

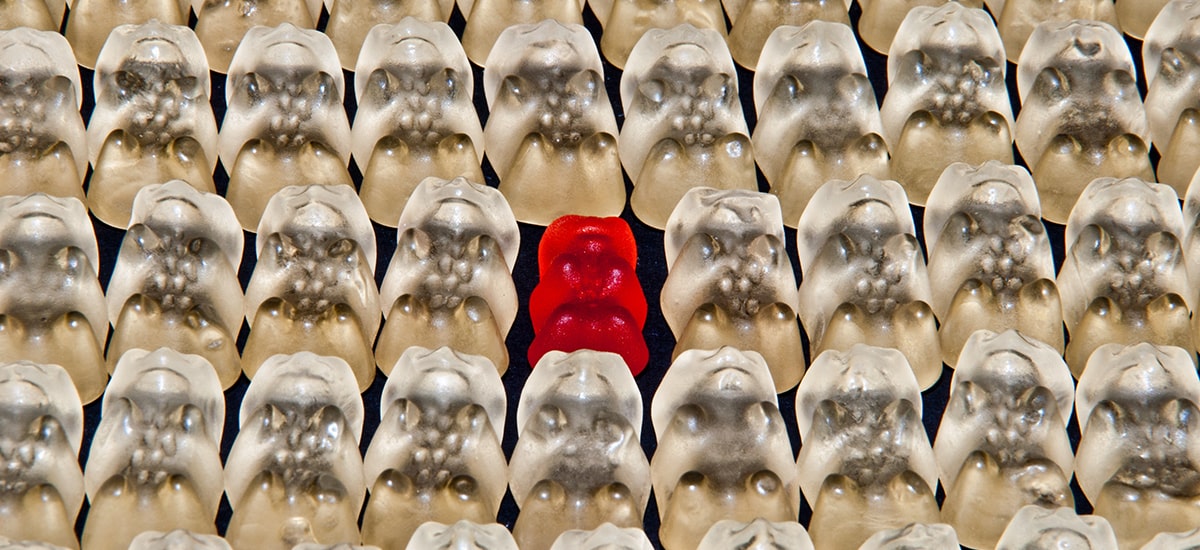

Minorities in the crosshairs cannot feel safe. And not only in China.

Do the police dream of a Minority Report?

Red dot, hot spot, danger zone. Call it what you like; what matters is that it gives clear information. Predpol shows places where a crime is likely to be committed within the next 12 hours, and police patrols are sent there. Another program lets the police compile a list of potential repeat offenders. People flagged as potentially dangerous are closely monitored. The police keep their eyes on them.

Prevention based on technology is becoming increasingly popular. After all, who would not want crimes to be prevented rather than prosecuted? That is what modern police-assistance applications promise: more effective policing and safer citizens. The problem is that hardly anyone checks whether, and to what extent, they keep this promise.

LAPD Chief Michel Moore announced last week that he was shutting down a program for identifying and monitoring offenders. Other programs will remain, but their operation will be monitored more closely. This follows a report published last month that put the Los Angeles police's crime-prevention tools under the microscope.

Self-fulfilling prophecies get great results.

The report shows that the withdrawn system suffered from basic flaws. The criteria for deciding who counted as potentially dangerous were inconsistent and therefore produced results that were hard to assess. The police inspector general's evaluation of the program found that as many as 44 percent of the people flagged as potential repeat offenders had either never been arrested for a violent crime or had been arrested for one only once. In addition, many people were put on the list on the recommendation of officers rather than as a result of the algorithm's calculations. It was enough for a police officer or detective to decide that someone belonged on the list and add them, and that alone opens the door to abuse.

As for Predpol, the report rates its results as satisfactory: more crimes are detected in the places the algorithm designates. That could be considered a success, were it not for common sense. If you send more patrols to one place than anywhere else, the chance of recording more crimes there goes up. Where there are no patrols, there is no one to record anything.

The problem is that in practice these programs replicate racial prejudice. Hot spots are usually flagged in poorer neighborhoods inhabited by Black and Latino minorities. More patrols appear there. More patrols catch more potential offenders. The circle closes. Predpol can also be fed various kinds of minor offenses: smoking weed, disturbing the peace, wandering the streets in a state far from sober.
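The circular logic can be reproduced with a toy simulation. This is a minimal sketch under loudly stated assumptions, not Predpol's actual model: two hypothetical districts with identical true crime rates, a single patrol sent each day to whichever district has the most recorded crime so far, and crime recorded only where the patrol is present. The function name and all numbers are illustrative.

```python
"""Toy model of a hot-spot policing feedback loop (hypothetical, illustrative only)."""


def simulate_hot_spot_policing(true_rates, initial_records, days):
    """Each day, send the patrol to the district with the most recorded crime
    so far; crime is recorded only in the patrolled district.

    true_rates      -- expected crimes per day in each district (assumed)
    initial_records -- crimes already on record before the simulation starts
    days            -- number of days to simulate
    Returns the final recorded-crime count for each district.
    """
    records = list(initial_records)
    for _ in range(days):
        # The "hot spot" is the district with the highest recorded count;
        # max() returns the first such index on ties.
        hot_spot = max(range(len(records)), key=lambda i: records[i])
        # Only the patrolled district adds to the record; crime elsewhere goes unseen.
        records[hot_spot] += true_rates[hot_spot]
    return records


if __name__ == "__main__":
    # Two districts with IDENTICAL true crime rates; district 0 starts with
    # one extra recorded incident (for example, from past over-policing).
    final = simulate_hot_spot_policing(true_rates=[5, 5], initial_records=[11, 10], days=30)
    print(final)  # [161, 10]
```

Although both districts have the same underlying crime rate, a one-incident head start sends every patrol to district 0, which ends up with about 94 percent of recorded crime. The recorded data measure where the police look, not only where crime happens, and the algorithm then treats that record as confirmation.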

Worse still, police sent to a district already described as dangerous, now by supposedly objective and unbiased algorithms as well, have their prejudices confirmed. US police are known not only for many years of racial profiling, in which data are used to justify racist behavior (you are still more likely to be stopped by a traffic patrol if you are Black), but also for an excessive readiness to use force and to reach for their weapons too quickly.

Reinforcing officers' belief that "these Black people" need more policing only entrenches systemic prejudice.

Discrimination fed into the input data becomes discrimination built into the system. States and cities are not the only ones who know this; private companies know it too.

Amazon's algorithms do not want women.

Last year Amazon stopped using a program, in development since 2014, that helped its HR department with recruitment. The program did its job too well. Eliminating half of humanity from the process undoubtedly makes the work easier and shrinks the pile of résumés to review.

The idea was simple. The tool was supposed to pick the five best applications out of all those submitted for a given position. The selection was based on data about Amazon employees. After all, HR knew who had been hired over the past dozen or so years and who had proven themselves in the role. What looks like a sensible approach cemented existing inequalities. The algorithm learned that the best candidates have documented industry experience, several big projects under their belt, and a penis.
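The mechanism is easy to reproduce in miniature. What follows is a minimal sketch, not Amazon's actual model (which was never published): a naive scorer that weights each résumé token by how often it appeared in previously hired versus rejected applications. The tokens, the `womens_club` proxy and the historical data are entirely hypothetical. If past hires skew male, any token that correlates with being a woman picks up a negative weight, even though gender is never an explicit input.

```python
import math
from collections import Counter


def learn_token_weights(history):
    """Learn a weight per résumé token from past hiring decisions.

    history -- list of (tokens, hired) pairs; tokens is a set of strings
    Weight = add-one-smoothed log-ratio of the token's count in hired
    versus rejected résumés (positive favors hiring, negative penalizes).
    """
    hired, rejected = Counter(), Counter()
    for tokens, was_hired in history:
        (hired if was_hired else rejected).update(set(tokens))
    vocab = set(hired) | set(rejected)
    return {t: math.log((hired[t] + 1) / (rejected[t] + 1)) for t in vocab}


def score(tokens, weights):
    """Sum the learned weights; tokens never seen in training contribute 0."""
    return sum(weights.get(t, 0.0) for t in tokens)


# Hypothetical history: every past hire lacked the proxy token.
HISTORY = (
    [({"python", "large_project"}, True)] * 8
    + [({"python", "large_project", "womens_club"}, False)] * 3
    + [({"python"}, False)] * 2
)

weights = learn_token_weights(HISTORY)
candidate_a = {"python", "large_project"}
candidate_b = {"python", "large_project", "womens_club"}  # identical except one token

if __name__ == "__main__":
    print(round(score(candidate_a, weights), 3), round(score(candidate_b, weights), 3))
```

Two otherwise identical résumés receive different scores, and candidate B falls below zero. Deleting an explicit gender field would not help, because the proxy token carries the same signal. This is how a reasonable-looking tactic ends up cementing existing inequalities.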

As Reuters reported, Amazon had known since 2015 that its recruiting algorithm followed the rule "gentlemen to the right, ladies to the bin." The company first tried to fix the algorithm and to limit its reliance on it, but did not abandon it until 2018. It is still not known what sophisticated methodology the HR department used to challenge the algorithm's verdicts and persuade its superiors to drop it. It is suspected that they carried out observational studies and noticed that most coding staff use their fingers.

We need regulation; we cannot afford to wait for a catastrophe.

At the beginning of April, facial recognition researchers sent an open letter to Amazon. They want Jeff Bezos to stop selling the company's facial recognition system to the police because it is prone to errors, and it is precisely these errors that perpetuate discrimination based on sex and race. A group of Amazon shareholders wants the company to stop selling facial recognition tools until it is confirmed that they do not contribute to human rights violations. The shareholders will vote on the matter next month.

It is a purely symbolic gesture. The board recommends voting against the resolution, but even if a majority of shareholders decide to make life difficult for the board, little will change. Such a resolution is only advisory and is unlikely to be heeded.

The problem is that Amazon's system does quite well at recognizing white men, but when it comes to women or people with darker skin, it starts to falter. The algorithm simply reproduces the inequalities in its data. Facial recognition systems built in the West work best on white men, while those built in the East lead in identifying Asians.
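A system can "do quite well" on average while failing particular groups, and a single aggregate accuracy figure hides exactly that. What follows is a minimal sketch of disaggregated evaluation, which computes accuracy separately for each demographic group. The group labels and results are made up for illustration and are not measurements of Amazon's or anyone else's system.

```python
from collections import defaultdict


def accuracy_by_group(results):
    """Compute identification accuracy separately for each group.

    results -- iterable of (group, predicted_id, true_id) tuples
    Returns {group: fraction of correct identifications}.
    """
    correct, total = defaultdict(int), defaultdict(int)
    for group, predicted, actual in results:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical results: group_a is always identified correctly,
# group_b only in 7 of 10 cases (true_id -1 marks a mismatch).
RESULTS = [("group_a", i, i) for i in range(10)] + [
    ("group_b", i, i if i < 7 else -1) for i in range(10)
]

overall = sum(p == t for _, p, t in RESULTS) / len(RESULTS)
per_group = accuracy_by_group(RESULTS)

if __name__ == "__main__":
    print(overall, per_group)
```

The overall accuracy here is 85 percent, which sounds acceptable, yet one group is recognized every time and the other only 70 percent of the time. An audit that reports only the overall number would miss the disparity.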

Amazon is beginning to talk about the need to regulate the use of facial recognition. Microsoft is calling on lawmakers to look into the problem. Google does not sell such software, although the technology keeps developing. Everyone is holding back, waiting to see what comes next. The problem is that China is already answering that question.

If the technology can be abused, it will be abused.

It all comes down to bookkeeping: profits, losses, potential opportunities and potential threats. We have to take the ongoing arms race into account. If we do not do something, that does not mean someone else will not. We need to observe, collect data, draw conclusions and actively look for the dangers that come with technological development. China cannot remain for us a story about an evil dystopian state that we look down on from the heights of moral superiority. We need to approach its problems realistically, because they are closer to us than it might seem.
